Node Architecture For Enterprise

| (14 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | This page describes the overall technology stack used within | + | This page describes the overall technology stack used within AccountIT, and to some degree also within the supporting business systems. |

= Overview = | = Overview = | ||

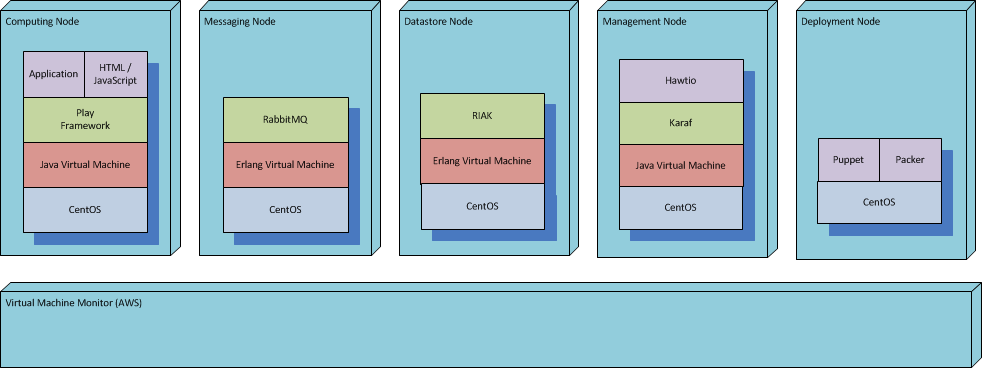

| − | The diagram below gives an overview of the | + | The diagram below gives an overview of the technologies used in the different nodes. |

| + | |||

[[File:Node.png]] | [[File:Node.png]] | ||

| Line 12: | Line 13: | ||

We want to have has few technologies as possible, yet for each problem we want to have the best possible technology. | We want to have has few technologies as possible, yet for each problem we want to have the best possible technology. | ||

| − | + | * We want few technologies, because the fewer we have, the easier they are to master. At the same time we want the best technologies, because they solve our problems in the most efficient way. | |

| + | * We should never have two different technologies for solving the same problem. | ||

| − | + | = Cloud = | |

| + | The entire enterprise IT, i.e. both AccountIT as well as Internal systems will be run "in the cloud". The primary reason for this is the lack of own data-center where the systems can be hosted. | ||

| + | As cloud data-centers are lease based, it is essential that the all systems (AccountIT as well as business systems) must be horizontally scalable ("elastic scaling") such that the systems can "scale down" i.e. reduce the use of "HW" resources like CPU, memory, hard-disk and network. | ||

| + | Scaling must be automatic to provide with a "24 / 7" illusion to the user, while keeping the cost of leasing the "HW" resources is kept to a minimum. | ||

| + | "HW" in this context is virtual as it is provided by a "Cloud provider" like Amazon WebServices. | ||

= Play Framework = | = Play Framework = | ||

| − | + | The AccountIT application will be based on the Play Framework. Play provides a UI framework that has support for HTML5 and JavaScript making it possible to provide a modern web-application feel. | |

| − | The | + | The framework provides a developer friendly environment making it easy to develop and test - this is the primary reason for choosing this framework. |

| − | + | Development can be done in both Java and Scala - giving possibility to mix Object Oriented with Functional Programming. | |

| − | + | ||

= Messaging = | = Messaging = | ||

| + | Messaging is used to build a asynchronous system based on the CQRS pattern. The commands represent update requests, while query represent search requests. | ||

| + | RabbitMQ will also be used to integrate between AccuntIT and supporting business systems (such as CRM, ERP, support and sales systems). But also between supporting business systems. | ||

| − | + | = In memory data-grid = | |

| − | + | Caching mechanism have been used in almost any component of a system to hold configuration data used by the component. E.g. a web-application may cache static information to reduce response time. | |

| − | + | To support clustered caching, in memory data-grid have been developed, providing with the benefits of caching distributed to all members of a cluster. | |

= Datastore = | = Datastore = | ||

| + | Datastore have traditionally been implemented using relational databases (RDBMS), but these have traditionally lacked the support for horizontal scalability, and thus we shall use NoSQL datastores. But new datastore solutions seems to bridge the gap - e.g. [http://www.voltdb.com VoltDB], which also provides "in-memory database" capabilities. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

= Monitoring = | = Monitoring = | ||

| − | |||

With a system landscape consisting of nodes in clusters monitoring occurs at two levels: | With a system landscape consisting of nodes in clusters monitoring occurs at two levels: | ||

| − | + | * At node level with each node exposing monitoring information through a monitoring agent. A surveillance / monitoring tool used by IT operations can collect the information provided by the monitoring agent | |

| − | + | * An aggregated view of the cluster of nodes, displaying the overall state of the cluster of some node. The monitoring aggreate is hosted by the "Management Node" | |

| + | |||

| + | The external interface of a monitoring agent is provided by http://jolokia.org, which exposes JMX MBean information via a JSON over HTTP. Jolokia is packaged within Hawtio, which additionally provides a Web UI to the JMX MBean information that jolokia provides. Thus each node as well as the aggreated information is available via a web-browser through Hawtio. | ||

| − | + | For non-java applications like RabbitMQ and RIAK, a REST interface will be required, such that the Management node can request state of the node, and produce an aggregated result that can be provided to a monitoring system. | |

| − | |||

= Deployment = | = Deployment = | ||

| + | With cloud hosting the deployment has to be automated, as spawning a new instance and deploying the system is done via a API. There are two distinct strategies regarding deployment: | ||

| + | * deployment system using an agent to deploy on the particular instance. Puppet is an example of this, where an image with the bare-bone OS and Puppet agent are deployed. When the Puppet agent starts it will look-up the Puppet master and request for its node configuration. The Puppet agent would periodically check with the Puppet master of its configuration and based on this update the node setup. | ||

| + | * deployment of an image with the complete system. Packer is an example of this, where an image is "baked" with all the required components. The image is spawned when a node is requested from the cloud system. | ||

| + | Each of the two have pros and cons, and the choice will reflect the priority these have. | ||

| − | + | [[Category:Enterprise]] | |

| − | + | [[Category:Technology]] | |

| − | + | ||

| − | + | ||

| − | + | ||

Latest revision as of 20:01, 12 May 2014

This page describes the overall technology stack used within AccountIT, and to some degree also within the supporting business systems.


Overview

The diagram below gives an overview of the technologies used in the different nodes.

Guiding Principles

The main guiding principle for choosing technologies is:

We want to have as few technologies as possible, yet for each problem we want to have the best possible technology.

- We want few technologies, because the fewer we have, the easier they are to master. At the same time we want the best technologies, because they solve our problems in the most efficient way.

- We should never have two different technologies for solving the same problem.

Cloud

The entire enterprise IT, i.e. both AccountIT and the internal systems, will run "in the cloud". The primary reason for this is the lack of an in-house data center where the systems could be hosted.

As cloud data centers are lease-based, it is essential that all systems (AccountIT as well as the business systems) are horizontally scalable ("elastic scaling"), so that they can "scale down" when demand drops, i.e. reduce their use of "HW" resources such as CPU, memory, hard disk and network.

Scaling must be automatic, to give users the illusion of "24 / 7" availability while keeping the cost of leasing "HW" resources to a minimum.

"HW" in this context is virtual, as it is provided by a "cloud provider" such as Amazon Web Services.
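Automatic elastic scaling is usually driven by a rule that maps observed load to a desired number of instances. The Java sketch below shows a simple target-tracking rule under stated assumptions. Average CPU utilization, in whole percent, is the only metric. The `ElasticScaler` class, the 60 % target and the 2..10 instance bounds are all hypothetical and are not part of this architecture. In practice, the cloud provider's own auto-scaling service would normally evaluate a rule like this.

```java
/**
 * Hypothetical target-tracking scaling rule: pick the instance count that would bring
 * average CPU utilization back to a target, clamped to fixed bounds.
 */
public final class ElasticScaler {
    private final int targetCpuPercent; // e.g. 60 (assumption, not from the architecture)
    private final int minInstances;     // floor: keeps enough capacity for round-the-clock service
    private final int maxInstances;     // ceiling: caps the cost of leased resources

    public ElasticScaler(int targetCpuPercent, int minInstances, int maxInstances) {
        if (targetCpuPercent < 1 || targetCpuPercent > 100) {
            throw new IllegalArgumentException("target must be in 1..100");
        }
        if (minInstances < 1 || maxInstances < minInstances) {
            throw new IllegalArgumentException("invalid instance bounds");
        }
        this.targetCpuPercent = targetCpuPercent;
        this.minInstances = minInstances;
        this.maxInstances = maxInstances;
    }

    /** Desired instance count, given the current count and the average CPU utilization in percent. */
    public int desiredInstances(int currentInstances, int avgCpuPercent) {
        // Total load in "percent-instances", divided by the per-instance target, rounded up.
        int load = currentInstances * avgCpuPercent;
        int desired = (load + targetCpuPercent - 1) / targetCpuPercent;
        return Math.max(minInstances, Math.min(maxInstances, desired));
    }

    public static void main(String[] args) {
        ElasticScaler scaler = new ElasticScaler(60, 2, 10);
        System.out.println(scaler.desiredInstances(4, 90)); // 6: scale out under load
        System.out.println(scaler.desiredInstances(4, 15)); // 2: scale in, but not below the floor
    }
}
```

The lower bound is what preserves the "24 / 7" illusion when load is low. The upper bound and the scale-in path are what keep leasing costs down.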

Play Framework

The AccountIT application will be based on the Play Framework. Play provides a UI framework with support for HTML5 and JavaScript, making it possible to give the application a modern web-application feel.

The framework provides a developer-friendly environment that makes it easy to develop and test; this is the primary reason for choosing it.

Development can be done in both Java and Scala, making it possible to mix object-oriented and functional programming.

Messaging

Messaging is used to build an asynchronous system based on the CQRS (Command Query Responsibility Segregation) pattern. Commands represent update requests, while queries represent search requests.

RabbitMQ will also be used for integration between AccountIT and the supporting business systems (such as CRM, ERP, support and sales systems), as well as between the supporting business systems themselves.
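The command/query split can be made concrete with a minimal in-process sketch. It assumes a small account-deposit domain invented for illustration, and a `BlockingQueue` stands in for the RabbitMQ queue that would carry commands between nodes. Commands are applied asynchronously by a handler thread, which updates a separate read model, and queries are answered only from that read model. All class and method names are hypothetical, and the sketch does not use the RabbitMQ client API.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.LockSupport;

public final class CqrsSketch {
    /** Command: an update request (hypothetical domain: deposit an amount on an account). */
    public record Deposit(String accountId, long amount) {}

    // Stand-in for a RabbitMQ command queue (assumption: one queue, one consumer).
    private final BlockingQueue<Deposit> commandQueue = new LinkedBlockingQueue<>();
    // Read model: a denormalized view written only by the command handler and read by queries.
    private final Map<String, Long> balanceView = new ConcurrentHashMap<>();
    private final Thread commandHandler;

    public CqrsSketch() {
        commandHandler = new Thread(() -> {
            try {
                while (true) {
                    Deposit cmd = commandQueue.take(); // blocks until a command arrives
                    balanceView.merge(cmd.accountId(), cmd.amount(), Long::sum);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // stop on shutdown
            }
        }, "command-handler");
        commandHandler.setDaemon(true);
        commandHandler.start();
    }

    /** Command side: fire-and-forget; the caller does not wait for the update to be applied. */
    public void send(Deposit command) { commandQueue.add(command); }

    /** Query side: answers only from the read model, which may briefly lag behind the commands. */
    public long balance(String accountId) { return balanceView.getOrDefault(accountId, 0L); }

    /** Polls the read model until it shows the expected value or the timeout expires. */
    public boolean awaitBalance(String accountId, long expected, long timeoutMillis) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        while (balance(accountId) != expected) {
            if (System.nanoTime() - deadline >= 0) return false;
            LockSupport.parkNanos(TimeUnit.MILLISECONDS.toNanos(1));
        }
        return true;
    }

    public void shutdown() { commandHandler.interrupt(); }

    public static void main(String[] args) {
        CqrsSketch app = new CqrsSketch();
        app.send(new Deposit("acc-1", 100));
        app.send(new Deposit("acc-1", 50));
        // The query sees the result only after the handler has applied both commands.
        System.out.println(app.awaitBalance("acc-1", 150, 1000));
        app.shutdown();
    }
}
```

Because commands are asynchronous, a query issued immediately after `send` may still return the old value. This eventual consistency is the trade-off CQRS accepts in exchange for decoupling the update and search paths.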

In-Memory Data Grid

Caching mechanisms are used in almost every component of a system to hold configuration data used by the component; a web application, for example, may cache static information to reduce response time.

To support clustered caching, in-memory data grids have been developed, which extend the benefits of caching to all members of a cluster.
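The basic caching idea can be illustrated with a minimal read-through cache. It assumes a hypothetical slow loader for configuration data. In a clustered setup, an in-memory data grid would take the place of the local `ConcurrentHashMap` shown here, so that an entry loaded by one node becomes visible to all cluster members. That distribution is not modelled in this sketch.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

public final class ReadThroughCache<K, V> {
    private final Map<K, V> store = new ConcurrentHashMap<>(); // local stand-in for a data-grid map
    private final Function<K, V> loader;                       // slow source, e.g. a config file or DB (assumption)

    public ReadThroughCache(Function<K, V> loader) { this.loader = loader; }

    /** Returns the cached value, calling the loader only on the first miss for a key. */
    public V get(K key) { return store.computeIfAbsent(key, loader); }

    /** Drops an entry so the next get() reloads it, e.g. after the configuration changes. */
    public void invalidate(K key) { store.remove(key); }

    public static void main(String[] args) {
        AtomicInteger loads = new AtomicInteger();
        ReadThroughCache<String, String> config = new ReadThroughCache<>(key -> {
            loads.incrementAndGet(); // count how often the slow source is hit
            return "value-of-" + key;
        });
        config.get("currency");
        config.get("currency");          // served from the cache, no second load
        System.out.println(loads.get()); // 1
    }
}
```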

Datastore

Datastores have traditionally been implemented using relational databases (RDBMS), but these have generally lacked support for horizontal scalability, and we shall therefore use NoSQL datastores. However, newer datastore solutions seem to bridge the gap, e.g. VoltDB (http://www.voltdb.com), which also provides "in-memory database" capabilities.
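NoSQL datastores typically scale horizontally by partitioning data across nodes. Riak, for example, places keys on a consistent-hashing ring. The sketch below shows the general technique with virtual nodes. It assumes MD5 as the hash function and uses hypothetical node names, and it is not Riak's actual implementation. The key property is that adding or removing a node only moves the keys that fall in that node's ring segments, rather than reshuffling every key.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

public final class ConsistentHashRing {
    private final TreeMap<Long, String> ring = new TreeMap<>(); // ring position -> node name
    private final int virtualNodes; // positions per node; more positions give a more even spread

    public ConsistentHashRing(int virtualNodes) {
        if (virtualNodes < 1) throw new IllegalArgumentException("virtualNodes must be >= 1");
        this.virtualNodes = virtualNodes;
    }

    /** Maps a string to a ring position using the first 8 bytes of its MD5 digest. */
    private static long hash(String s) {
        try {
            byte[] d = MessageDigest.getInstance("MD5").digest(s.getBytes(StandardCharsets.UTF_8));
            long h = 0;
            for (int i = 0; i < 8; i++) h = (h << 8) | (d[i] & 0xFF);
            return h;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 must be supported by every Java platform", e);
        }
    }

    public void addNode(String node) {
        for (int i = 0; i < virtualNodes; i++) ring.put(hash(node + "#" + i), node);
    }

    public void removeNode(String node) {
        for (int i = 0; i < virtualNodes; i++) ring.remove(hash(node + "#" + i));
    }

    /** Owner of a key: the first node position at or after the key's hash, wrapping round the ring. */
    public String nodeFor(String key) {
        if (ring.isEmpty()) throw new IllegalStateException("no nodes on the ring");
        Map.Entry<Long, String> owner = ring.ceilingEntry(hash(key));
        return (owner != null ? owner : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        ConsistentHashRing ring = new ConsistentHashRing(100);
        ring.addNode("node-1");
        ring.addNode("node-2");
        ring.addNode("node-3");
        for (String key : new String[] {"customer:42", "invoice:7", "account:1001"}) {
            System.out.println(key + " -> " + ring.nodeFor(key));
        }
    }
}
```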

Monitoring

With a system landscape consisting of nodes in clusters, monitoring occurs at two levels:

- At node level, with each node exposing monitoring information through a monitoring agent. A surveillance/monitoring tool used by IT operations can collect the information provided by the agent.

- As an aggregated view of the cluster of nodes, displaying the overall state of the cluster that a given node belongs to. The monitoring aggregate is hosted by the "Management Node".

The external interface of a monitoring agent is provided by Jolokia (http://jolokia.org), which exposes JMX MBean information as JSON over HTTP. Jolokia is packaged within Hawtio, which additionally provides a web UI for the JMX MBean information that Jolokia exposes. Thus each node, as well as the aggregated information, is available in a web browser through Hawtio.
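Jolokia serves the MBeans registered in the JVM's MBean server, so a Java node can publish its own monitoring information by registering an MBean. The sketch below uses only the standard `javax.management` API. The `NodeStatus` MBean, its object name `com.accountit:type=NodeStatus,name=...` and its attributes are hypothetical. With the Jolokia agent attached, such an attribute would typically be read with an HTTP GET of the form `<jolokia base URL>/read/<object name>/<attribute>`. The base URL depends on how the agent is deployed.

```java
import java.lang.management.ManagementFactory;
import java.util.concurrent.atomic.AtomicLong;
import javax.management.JMException;
import javax.management.ObjectName;

public final class NodeMonitoring {
    /** Management interface: each getter becomes a read-only JMX attribute (NodeName, ProcessedCommands). */
    public interface NodeStatusMBean {
        String getNodeName();
        long getProcessedCommands();
    }

    /** Standard MBean: by convention the interface is named after the class plus "MBean". */
    public static final class NodeStatus implements NodeStatusMBean {
        private final String nodeName;
        private final AtomicLong processed = new AtomicLong();

        public NodeStatus(String nodeName) { this.nodeName = nodeName; }

        /** Called by application code whenever the node has handled a command. */
        public void commandProcessed() { processed.incrementAndGet(); }

        @Override public String getNodeName() { return nodeName; }
        @Override public long getProcessedCommands() { return processed.get(); }
    }

    /** Registers the MBean with the platform MBean server, where a JMX agent such as Jolokia can find it. */
    public static ObjectName register(NodeStatus status) {
        try {
            // Hypothetical domain and key properties; pick a naming scheme per node type.
            ObjectName name = new ObjectName("com.accountit:type=NodeStatus,name=" + status.getNodeName());
            ManagementFactory.getPlatformMBeanServer().registerMBean(status, name);
            return name;
        } catch (JMException e) {
            throw new IllegalStateException("MBean registration failed", e);
        }
    }

    /** Reads an attribute back through JMX, as a monitoring agent would. */
    public static Object readAttribute(ObjectName name, String attribute) {
        try {
            return ManagementFactory.getPlatformMBeanServer().getAttribute(name, attribute);
        } catch (JMException e) {
            throw new IllegalStateException("attribute read failed", e);
        }
    }

    public static void main(String[] args) {
        NodeStatus status = new NodeStatus("node-1");
        ObjectName name = register(status);
        status.commandProcessed();
        System.out.println(readAttribute(name, "ProcessedCommands")); // 1
    }
}
```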

For non-Java applications such as RabbitMQ and Riak, a REST interface will be required, so that the Management Node can request the state of each node and produce an aggregated result that can be provided to a monitoring system.
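The aggregation performed by the Management Node can be reduced to a rule over per-node states. The sketch below assumes that each node, whether read via Jolokia or via a REST interface, reports one of three hypothetical states. Under this rule, the cluster is healthy only if every node is up, unavailable if no node is reporting or all are down, and degraded otherwise. A real setup would likely use richer thresholds, and fetching the states over HTTP is left out.

```java
import java.util.EnumMap;
import java.util.Map;

public final class ClusterHealth {
    public enum NodeState { UP, DEGRADED, DOWN }
    public enum ClusterState { HEALTHY, DEGRADED, UNAVAILABLE }

    /** Aggregates per-node states (node name -> state) into a single cluster state. */
    public static ClusterState aggregate(Map<String, NodeState> nodes) {
        if (nodes.isEmpty()) return ClusterState.UNAVAILABLE; // nothing is reporting
        Map<NodeState, Integer> counts = new EnumMap<>(NodeState.class);
        for (NodeState s : nodes.values()) counts.merge(s, 1, Integer::sum);
        int up = counts.getOrDefault(NodeState.UP, 0);
        int down = counts.getOrDefault(NodeState.DOWN, 0);
        if (up == nodes.size()) return ClusterState.HEALTHY;       // every node fully up
        if (down == nodes.size()) return ClusterState.UNAVAILABLE; // no node can serve requests
        return ClusterState.DEGRADED;                              // partial capacity
    }

    public static void main(String[] args) {
        Map<String, NodeState> states = Map.of(
                "node-1", NodeState.UP,
                "node-2", NodeState.UP,
                "node-3", NodeState.DOWN);
        System.out.println(aggregate(states)); // DEGRADED
    }
}
```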

Deployment

With cloud hosting, deployment has to be automated, as spawning a new instance and deploying the system onto it is done via an API. There are two distinct deployment strategies:

- A deployment system that uses an agent to deploy onto each instance. Puppet is an example of this: an image containing a bare-bones OS and the Puppet agent is deployed. When the Puppet agent starts, it looks up the Puppet master and requests its node configuration. The agent then periodically checks its configuration with the Puppet master and updates the node setup accordingly.

- Deployment of an image containing the complete system. Packer is an example of this: an image is "baked" with all the required components, and the image is spawned whenever a node is requested from the cloud system.

Each of the two approaches has pros and cons, and the choice will depend on how these are prioritized.
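The agent-based strategy essentially comes down to a pull-and-converge loop. The sketch below assumes a hypothetical `Supplier` standing in for the Puppet master and models a node's configuration as a map from package name to version. It illustrates the idea only and does not follow Puppet's actual protocol or catalog format. With the baked-image strategy, the desired state is fixed when the image is built, so no such loop runs on the node.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;
import java.util.function.Supplier;

/** Minimal pull-and-converge agent; the package -> version model is hypothetical. */
public final class PullAgent {
    private final Supplier<Map<String, String>> configSource;      // stand-in for the configuration master
    private final Map<String, String> installed = new HashMap<>(); // current state of this node

    public PullAgent(Supplier<Map<String, String>> configSource) { this.configSource = configSource; }

    /** One agent run: fetch the desired state and apply only the differences. Returns the number of changes. */
    public int runOnce() {
        Map<String, String> desired = configSource.get();
        int changes = 0;
        for (Map.Entry<String, String> e : desired.entrySet()) {
            if (!Objects.equals(installed.get(e.getKey()), e.getValue())) {
                installed.put(e.getKey(), e.getValue()); // simulated install or upgrade
                changes++;
            }
        }
        int before = installed.size();
        installed.keySet().retainAll(desired.keySet()); // simulated removal of anything no longer wanted
        return changes + (before - installed.size());
    }

    public Map<String, String> installed() { return Map.copyOf(installed); }

    public static void main(String[] args) {
        Map<String, String> desired = new HashMap<>(Map.of("app-server", "1.0", "monitoring-agent", "2.1"));
        PullAgent agent = new PullAgent(() -> desired);
        // A real agent would repeat runOnce() on a timer, e.g. via ScheduledExecutorService.scheduleAtFixedRate.
        System.out.println(agent.runOnce()); // 2: first run installs both packages
        System.out.println(agent.runOnce()); // 0: already converged
        desired.put("app-server", "1.1");    // configuration changed centrally
        System.out.println(agent.runOnce()); // 1: the node follows the change
    }
}
```

Because each run is idempotent, a run that finds nothing to change does no work. This is what makes periodic polling against the master safe.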